When you get access to LSEG Workspace, your account may not cover all options of the Excel addin. For student accounts in particular, the Datastream part of the addin typically does not work. This is inconvenient when you want to do an event study, because you then do not have the Request Table option, the Datastream Excel addin feature that was built for exactly this kind of download.

The main feature available for time series downloads in the Excel addin is the Build Formula button.

The main drawback of this feature is that it cannot directly download data for multiple equities over multiple time windows in one go. By using this feature and editing the resulting Workspace formula, however, you can still do targeted downloads for specific, varying windows.

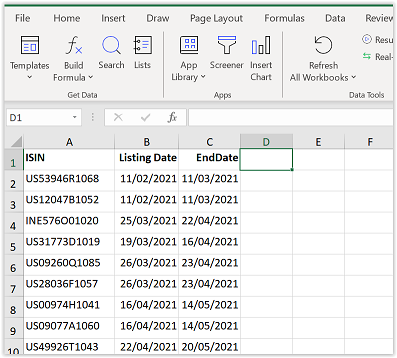

Step 1) Gather data on events with beginning and end dates

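In this example I use ISIN codes in column A, with the start date of each event window in column B and the end date in column C. It is also possible to use RIC codes (Reuters Instrument Codes, which are proprietary), SEDOL codes and 9-character CUSIP codes.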
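If you prepare the event list outside Excel, a small script can check the identifiers and compute the window dates before you paste them into columns A to C. The sketch below is a minimal example under my own assumptions: `isin_is_valid`, `shift_weekdays` and `event_window` are hypothetical helper names, windows are counted in weekdays only (exchange holidays are ignored), and the check-digit test follows the standard ISIN rule (letters mapped to numbers, then the Luhn algorithm).

```python
from __future__ import annotations

from datetime import date, timedelta


def isin_is_valid(isin: str) -> bool:
    """Check length, country prefix and check digit of an ISIN (hypothetical helper)."""
    isin = isin.strip().upper()
    if len(isin) != 12 or not (isin.isascii() and isin.isalnum()):
        return False
    if not isin[:2].isalpha() or not isin[-1].isdigit():
        return False
    # Letters become two-digit numbers (A=10 ... Z=35); digits stay as they are.
    digits = "".join(str(int(ch, 36)) for ch in isin[:-1])
    # Luhn: starting from the rightmost digit, double every second digit.
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return (10 - total % 10) % 10 == int(isin[-1])


def shift_weekdays(day: date, n: int) -> date:
    """Move n weekdays forward (n > 0) or backward (n < 0); holidays are ignored."""
    step = 1 if n >= 0 else -1
    remaining = abs(n)
    while remaining:
        day += timedelta(days=step)
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return day


def event_window(event_day: date, before: int, after: int) -> tuple[date, date]:
    """Start and end date of a window around the event day, counted in weekdays."""
    return shift_weekdays(event_day, -before), shift_weekdays(event_day, after)


if __name__ == "__main__":
    # Illustrative event only: Apple's ISIN with a made-up event date.
    events = [("US0378331005", date(2024, 1, 10))]
    for isin, day in events:
        if not isin_is_valid(isin):
            print(f"Check identifier {isin}")
            continue
        start, end = event_window(day, before=5, after=5)
        # One line per event: column A (ISIN), B (start date), C (end date).
        print(f"{isin};{start.isoformat()};{end.isoformat()}")
```

Counting weekdays keeps the sketch dependency-free; if your windows must skip exchange holidays, you would need a trading calendar instead.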

Step 2) Do a time series download for the first event in the list

Use the Build Formula option for this. Make sure to change the layout so that the data is downloaded transposed. In this example I download price data (closing prices). The formula now looks as follows:

=@RDP.Data($A$2;"TR.PriceClose";"Frq=D SDate=#1 EDate=#2 RH=IN;Fd CODE=ISIN";E2;$B$2;$C$2)

Step 3) Change the Workspace formula in Excel

This allows you to copy the formula down for the other events. The main change is to remove the dollar signs from the formula, so that the absolute cell references become relative ones. It should look as follows:

=@RDP.Data(A2;"TR.PriceClose";"Frq=D SDate=#1 EDate=#2 RH=IN;Fd CODE=ISIN";E2;B2;C2)
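To see why the dollar signs matter, the sketch below mimics what Excel does when a formula is copied down: row numbers without a `$` move along with the copy, row numbers with a `$` stay fixed. It only illustrates Excel's relative/absolute reference rule and is not part of the Workspace addin; `shift_rows` is a hypothetical helper, and it assumes simple A1-style references and that text inside double quotes contains no cell references.

```python
import re

# A1-style reference: optional $, column letters, optional $, row digits.
CELL_REF = re.compile(r"(?<![A-Za-z0-9_.])(\$?)([A-Z]{1,3})(\$?)(\d+)(?![A-Za-z0-9_(])")


def shift_rows(formula: str, offset: int) -> str:
    """Mimic copying `formula` down by `offset` rows (hypothetical helper)."""

    def shift(match):
        col_abs, col, row_abs, row = match.groups()
        # A $ before the row number keeps it fixed; otherwise it moves with the copy.
        new_row = int(row) if row_abs else int(row) + offset
        return f"{col_abs}{col}{row_abs}{new_row}"

    # Split on double quotes: even-numbered parts are outside string literals.
    parts = formula.split('"')
    for i in range(0, len(parts), 2):
        parts[i] = CELL_REF.sub(shift, parts[i])
    return '"'.join(parts)


if __name__ == "__main__":
    params = '"TR.PriceClose";"Frq=D SDate=#1 EDate=#2 RH=IN;Fd CODE=ISIN"'
    absolute = f"=@RDP.Data($A$2;{params};E2;$B$2;$C$2)"
    relative = f"=@RDP.Data(A2;{params};E2;B2;C2)"
    for row_offset in (1, 2):
        print(shift_rows(absolute, row_offset))  # only E2 moves
        print(shift_rows(relative, row_offset))  # every reference moves
```

With the original formula only the output cell E2 moves, so every copied row would download the same event (row 2) again; with the relative version each row reads its own identifier and dates from columns A to C.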

Step 4) Copy the formula down for all events

Step 5) Use the button Refresh Worksheet

This option becomes available once you change the default of the refresh button in the Workspace ribbon. The default option is “Refresh all Workbooks”; by clicking the arrow below it you can change it to “Worksheet”. This avoids re-downloading the data in other worksheets in Excel. The result should look as follows:

A short example video that shows all the steps in sequence:
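Once the refreshed sheet holds one price row per event, the rows can be taken further for the event study itself. The sketch below is a minimal example that assumes you saved the worksheet as a semicolon-separated CSV file in which each row starts with the ISIN, start date and end date (columns A to C) and the downloaded closing prices follow from column E, where the formula writes its output, in date order. The exact output layout depends on your formula settings, so `first_price_col` is an assumption you may need to adjust; all helper names are hypothetical.

```python
from __future__ import annotations

import csv
import io


def to_number(cell: str) -> float | None:
    """Parse a price cell; accepts a decimal comma, returns None for non-numbers."""
    try:
        return float(cell.strip().replace(",", "."))
    except ValueError:
        return None


def read_event_rows(handle, first_price_col: int = 4):
    """Yield (identifier, prices) per row; index 4 is column E (assumed output start)."""
    for row in csv.reader(handle, delimiter=";"):
        if not row:
            continue
        numbers = (to_number(cell) for cell in row[first_price_col:])
        yield row[0], [x for x in numbers if x is not None]


def daily_returns(prices: list[float]) -> list[float]:
    """Simple returns r_t = P_t / P_(t-1) - 1 within one event window."""
    return [curr / prev - 1 for prev, curr in zip(prices, prices[1:])]


def window_return(prices: list[float]) -> float:
    """Return over the whole window: last price / first price - 1."""
    return prices[-1] / prices[0] - 1


if __name__ == "__main__":
    # Toy numbers to show the mechanics; they are not real prices.
    sample = io.StringIO("US0378331005;2024-01-03;2024-01-17;;US0378331005;100;125;;100\n")
    for isin, prices in read_event_rows(sample):
        print(isin, daily_returns(prices), window_return(prices))
```

Skipping non-numeric cells also drops empty days silently; if gaps matter for your event windows, keep the dates alongside the prices instead.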

Filed under: Datastream, Excel, LSEG Workspace | Comments Off on Workspace Excel addin and events

Today I have finished testing a first version of a Python script that is created to process

The Intercontinental Exchange (

The database TruCost ESG has data on many different aspects of Environment, Social, and Governance for both listed and unlisted companies. Historical data availability depends on the type of TruCost licence an organization has subscribed to.

WRDS is a company that offers a platform for using many financial and economic databases, for example CRSP, Compustat, NYSE intraday data and Audit Analytics. Recently it was announced (quite suddenly) that people who use Wharton Research Data Services accounts for the WRDS platform will be required to change the way they get access. WRDS account users will need to use either an App from